Deep Learning, Google TensorFlow, IBM Watson, and AI conversational systems

This post introduces some of the topics that will be discussed at upcoming events hosted by the Machine Intelligence Institute of Africa (MIIA), in particular Deep Learning and its applications, tools and platforms such as Google’s TensorFlow and IBM Watson (amongst others), Deep Learning limitations, and Quantum computing for Machine Learning.

As mentioned in a previous post, “Solving Intelligence, Solving Real-world Problems”, Machine or Artificial Intelligence (AI) is not only changing the way we use our computers and smartphones but also the way we interact with the real world. It is also one of the key exponential technologies in the Fourth Industrial Revolution. Gartner has recently named AI and Advanced Machine Learning (which includes technologies such as Deep Learning) their #1 Strategic Technology Trend for 2017. Gartner went on to predict that these technologies will increasingly augment and extend virtually every technology-enabled service, thing, or application, and will therefore become the primary battleground for technology vendors through at least 2020.

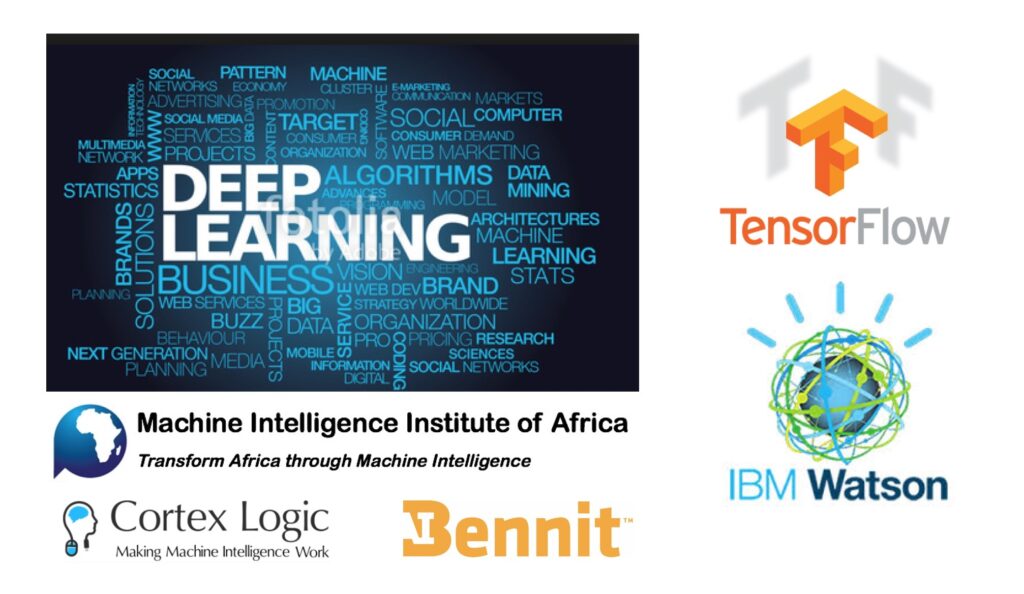

As illustrated in the diagram below, deep learning is a subset of machine learning, which is in turn a subset of AI. Whereas AI is a branch of computer science that develops machines and software that mimic human intelligence, machine learning is concerned with the construction and study of systems that can learn from data and improve at tasks with experience. Deep learning is a set of machine learning algorithms that attempt to model high-level abstractions in data by using multi-layered model architectures composed of multiple non-linear transformations. Deep learning excels at identifying patterns in unstructured data, which most people know as media such as images, sound, video and text.
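To make "multi-layered architectures composed of multiple non-linear transformations" concrete, here is a minimal sketch in plain Python. It is an illustration only: the weights are hand-picked rather than learned, and the names `dense`, `relu`, `forward` and `XOR_LAYERS` are hypothetical, not taken from any library. It shows a two-layer network computing XOR, a function that no single linear layer can represent.

```python
import math

def dense(inputs, weights, biases):
    """Affine layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Element-wise non-linearity applied between layers."""
    return [max(0.0, v) for v in values]

def sigmoid(v):
    """Squash the final score into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def forward(x, layers):
    """Apply every hidden layer with ReLU, then the output layer with a sigmoid."""
    h = x
    for weights, biases in layers[:-1]:
        h = relu(dense(h, weights, biases))
    weights, biases = layers[-1]
    return sigmoid(dense(h, weights, biases)[0])

# Hand-picked (not trained) weights, chosen for illustration only.
XOR_LAYERS = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]),  # h1 = relu(x1 + x2), h2 = relu(x1 + x2 - 1)
    ([[1.0, -2.0]], [-0.5]),                  # output = sigmoid(h1 - 2*h2 - 0.5)
]

if __name__ == "__main__":
    for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
        print(x, round(forward(x, XOR_LAYERS)))
```

In practice the weights would be learned from data by a framework such as TensorFlow; the point here is only that stacking layers with a non-linearity in between lets the model express patterns a single linear layer cannot.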

Deep Learning Applications

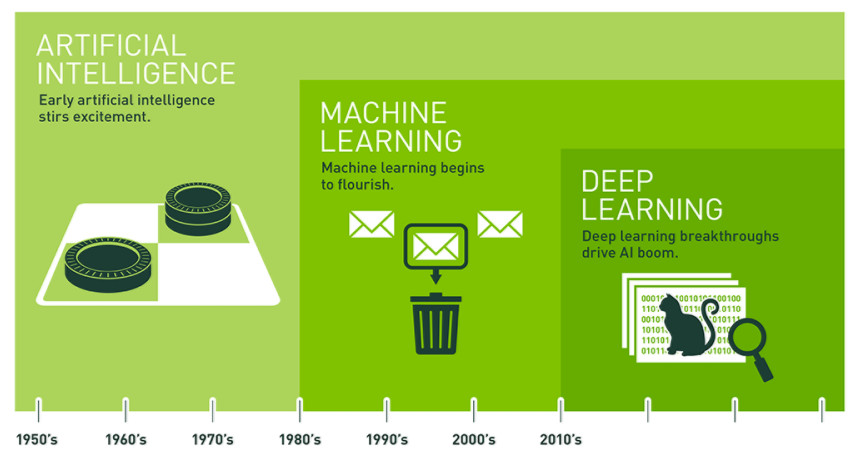

Computers are trained using extremely large historical datasets to help them adapt and learn from prior experience, identify anomalous patterns in large datasets, and improve predictive analysis. Deep learning increasingly addresses a range of computing tasks where programming explicit algorithms is infeasible. These include domains like fraud detection, robo-advisors, smart trading, recommendation pipelines, fully automated call centers, and inventory and product review auditing. Deep learning is also extensively used in search, autonomous drones, robot navigation systems, self-driving cars, and robotics in fulfillment centers. It is applied in many areas of AI such as text and speech recognition, image recognition and natural language processing. Computers can now recognize objects in images and video and transcribe speech to text better than humans can. Google replaced Google Translate’s architecture with neural networks, and now machine translation is also closing in on human performance. Google DeepMind’s AlphaGo has beaten a world-class Go player and is being challenged by the world number 1 in China. Computers can also predict crop yield better than the USDA. Artificial intelligence is also making its way into the realm of modern healthcare. Google’s DeepMind is revolutionizing eye care in the United Kingdom, and IBM’s Watson is tackling cancer diagnostics on par with human physicians. Both AI systems use deep learning, which is particularly applicable in diagnostics. “Why Deep Learning is suddenly changing your life” provides some high-level background on the Deep Learning revolution. “Solving Intelligence, Solving Real-world Problems” also highlights some companies and organizations involved in advancing the state of the art in Machine Intelligence, and Deep Learning in particular, as well as the Machine Intelligence landscape and some applications.

See also a list of general use cases for Deep Learning in the categories of sound, time series, text, image and video as it pertains to various industries.

A recent post on Topbots shared a number of ways Deep Learning improves your daily life: Netflix dynamically personalizes layouts and movie thumbnails; Yelp surfaces the most beautiful photos for any venue; Yahoo ensures you pick the best emoji for any situation; Stitch Fix finds you the perfect fashion faster; and Google’s machine translation approaches human levels.

Another blog shared some inspirational applications of Deep Learning: Colorization of black and white images; Adding sounds to silent movies; Automatic machine translation; Object classification in photographs; Automatic handwriting generation; Character text generation; Image caption generation; and Automatic game playing.

Some interesting deep learning applications highlighted on the Two Minute Papers’ Deep Learning channel include: Neural network learns the physics of fluids and smoke; Deep learning program hallucinates videos; Photo geolocation (building a system to determine the location where a photo was taken using just its pixels); Image super-resolution and image restoration (see also celebrity super-resolution); Sentence completion; Adaptive annotation approach: Developing a biomedical entity and relation recognition dataset using a human-into-the-loop approach (during annotation, a machine learning model is built on previous annotations and used to propose labels for subsequent annotation); Emoji suggestions for images; MNIST handwritten numbers in HD; Deep learning solution to the Netflix prize; Curating works of art; More robust neural networks against adversarial examples; Automatic colorization; Recurrent neural network generated music; Various orchestrations of Beethoven’s Ode to Joy; Recurrent neural network writes music and Shakespeare novels; Using Recurrent neural networks for password cracking (learn and guess passwords); Recurrent neural network writes in the style of George RR Martin; Improving YouTube video thumbnails with deep neural nets; Toxicity prediction using deep learning; Prediction of human population responses to toxic compounds by a collaborative competition; A comparison of algorithms and humans for Mitosis Detection (e.g. breast cancer detection application); Multi-agent cooperation and competition with deep reinforcement learning (use as a practical tool for studying the decentralized learning of multiagent systems living in highly complex environments); Automatic essay scoring; Rating selfies with convolution neural networks; and Data-driven structural priors for shape completion (reconstructing complete geometry from a single scan acquired with a low-quality consumer-level scanning device).

For references to Deep Learning applications in scholarly literature, see for example:

- Cornell University Library arXiv.org

- Allen Institute for Artificial Intelligence (AI2)’s Semantic Scholar

- Google Scholar

Deep Learning Tools and Platforms

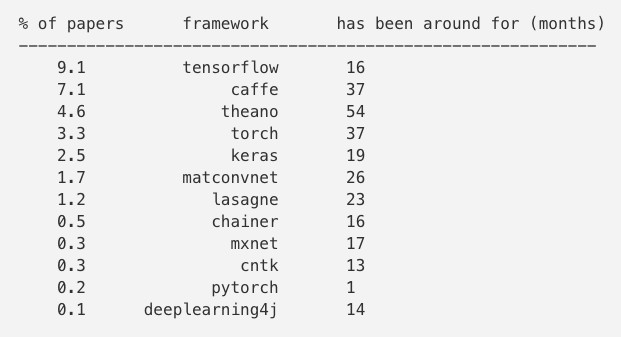

In a recent post, “A Peek at Trends in Machine Learning”, Andrej Karpathy (a Research Scientist at OpenAI) highlights some of the Deep Learning frameworks that are currently in use, based on papers submitted across the arxiv-sanity categories (cs.AI, cs.LG, cs.CV, cs.CL, cs.NE, stat.ML) and uploaded in March 2017 (already 2,000 submissions in these areas). About 10% of all papers submitted in March 2017 mentioned TensorFlow. Assuming that papers declare their framework with some fixed random probability that is independent of the framework, Andrej concludes that about 40% of the community is currently using TensorFlow (or a bit more, if you count Keras with the TensorFlow backend).
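The step from "about 10% of papers mention TensorFlow" to "about 40% of the community uses it" can be sketched as a simple ratio. If every paper names its framework with the same probability regardless of which framework it used, the usage share is the framework's mention rate divided by the rate at which papers mention any framework at all. The 25% overall mention rate below is not quoted from the post; it is simply the value implied by the two figures above, and the function name is hypothetical.

```python
def estimated_share(framework_mention_rate, any_framework_mention_rate):
    """Estimate the usage share of one framework among all framework users.

    Assumes each paper declares its framework with the same probability,
    independent of which framework was used, so that probability cancels out.
    """
    if any_framework_mention_rate <= 0:
        raise ValueError("overall mention rate must be positive")
    return framework_mention_rate / any_framework_mention_rate

# 0.10 is the TensorFlow mention rate from the text.
# 0.25 is an assumed overall mention rate, implied by the 10% and 40% figures.
tensorflow_share = estimated_share(0.10, 0.25)

if __name__ == "__main__":
    print(f"Estimated TensorFlow share: {tensorflow_share:.0%}")
```

If the independence assumption fails, for example because users of one framework name it more readily than users of another, the estimate shifts accordingly.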

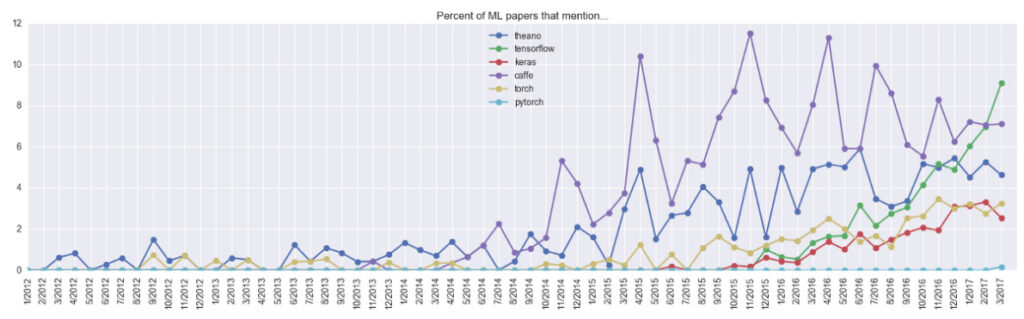

The following plot shows how some of the more popular frameworks evolved over time:

From the plot it is evident that although Theano has been around for a while, its growth has somewhat stalled. Caffe came to the forefront in 2014, but was overtaken by TensorFlow’s exponential growth in the last few months. Torch (and the very recent PyTorch) are also climbing, slowly and steadily. Andrej’s assessment is that Caffe and Theano will go into a slow decline and that TensorFlow’s growth will become a bit slower due to PyTorch.

Google’s DeepMind has recently announced that it has open sourced Sonnet, its object-oriented neural network library. Sonnet does not replace TensorFlow; it is simply a higher-level library that sits on top of TensorFlow and meshes well with DeepMind’s internal best practices for research.

Some of the deep learning packages that Amazon evaluated, and that are supported by Amazon Web Services (AWS), are Caffe, CNTK, MXNet, TensorFlow, Theano, and Torch. AWS identified MXNet as the most scalable framework, and Amazon is calling on the open-source community to invest more effort in MXNet. See also their Deep Learning AMI (Amazon Machine Image) Amazon Linux Version, which is supported and maintained by AWS for use on Amazon Elastic Compute Cloud (Amazon EC2). It is designed to provide a stable, secure, and high-performance execution environment for deep learning applications running on Amazon EC2.

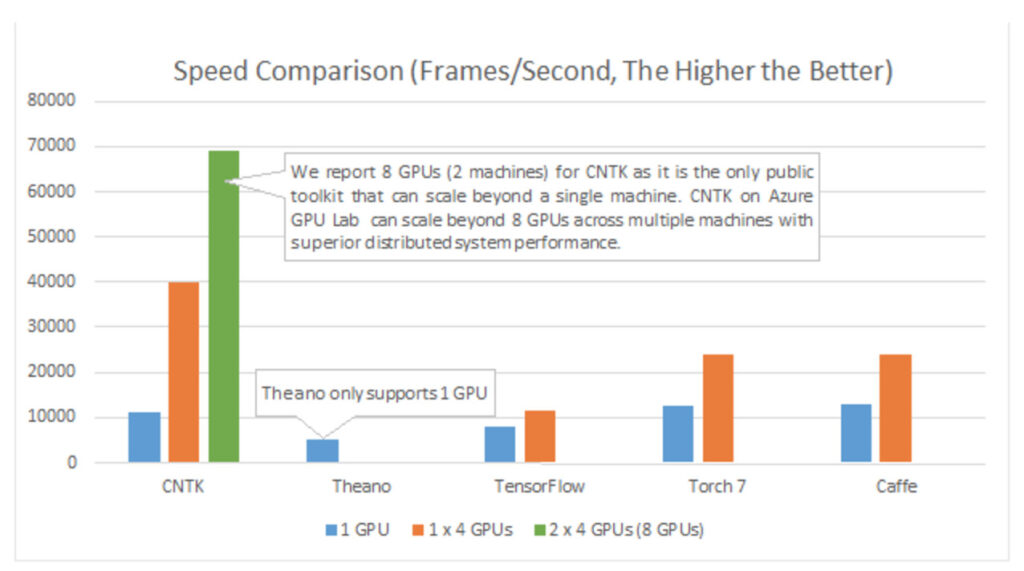

The Microsoft Cognitive Toolkit, previously known as CNTK, has also been open sourced on GitHub. Microsoft claims that in internal tests CNTK has proved more efficient than some other popular computational toolkits that developers use to create deep learning models for things like speech and image recognition, because it has better communication capabilities (see graph below).

IBM has also pushed Deep Learning with a Watson upgrade and has recently partnered with NVIDIA to create a fast Deep Learning Enterprise solution by pairing the IBM PowerAI Software Toolkit with NVIDIA NVLink and GPUDL Libraries optimized for the IBM Power architecture. They report that it enables 2X performance breakthroughs on AlexNet with Caffe. According to IBM, PowerAI makes deep learning, machine learning, and AI more accessible and more performant. By combining this software platform for deep learning with IBM Power Systems, enterprises can rapidly deploy a fully optimized and supported platform for machine learning with fast performance. As illustrated in the diagram below, the PowerAI platform includes some of the most popular machine learning frameworks and their dependencies, and it is built for easy and rapid deployment.

In 2016 Google announced that it had designed its own tensor processing unit (TPU), an application-specific integrated circuit (ASIC) designed for high throughput of low-precision arithmetic, to run TensorFlow workloads. Google has now released some performance data for its TPU, showing how favorably it compares to Intel’s Haswell CPUs and Nvidia’s K80 (Kepler-based) dual-GPU data center card for inference workloads. All of Google’s benchmarks measure inference performance as opposed to initial neural network training.
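As a rough illustration of what "low-precision arithmetic" means for inference, the sketch below maps floating-point values to 8-bit integers with one shared scale, computes a dot product in integers, and rescales once at the end. This is a generic symmetric quantization scheme chosen for illustration; it is an assumption, not a description of how the TPU or TensorFlow actually quantizes, and all function names are hypothetical.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers using a single shared scale factor."""
    qmax = 2 ** (num_bits - 1) - 1                     # 127 for 8 bits
    scale = max(abs(v) for v in values) / qmax or 1.0  # avoid a zero scale
    return [round(v / scale) for v in values], scale

def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]

def quantized_dot(a, b):
    """Dot product accumulated in integers, converted back to float once."""
    qa, scale_a = quantize(a)
    qb, scale_b = quantize(b)
    return sum(x * y for x, y in zip(qa, qb)) * scale_a * scale_b

# Illustrative values only.
weights = [0.5, -1.0, 0.25]
activations = [1.0, 2.0, -4.0]

if __name__ == "__main__":
    exact = sum(w * x for w, x in zip(weights, activations))
    print("exact:", exact, "8-bit approximation:", quantized_dot(weights, activations))
```

The integer result differs slightly from the exact one; the trade-off is that integer multiply-accumulate units are much cheaper in silicon than floating-point ones, which is the general motivation for low-precision inference hardware.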

Deep Learning Limitations and the 3rd AI wave

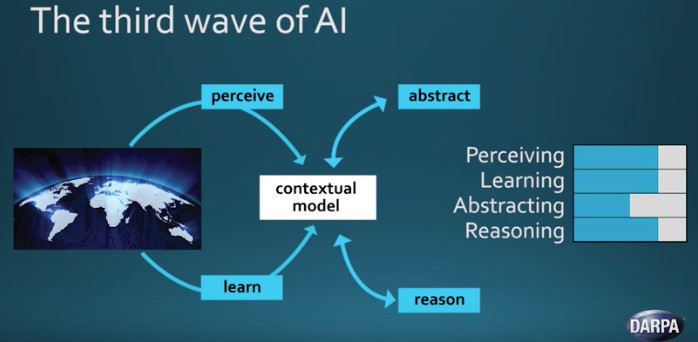

Although we are still scratching the surface with respect to deep learning applications (where deep learning has become the go-to solution for a broad range of applications, often outperforming state-of-the-art), it is also important to understand the limits of deep learning. A recent VentureBeat article mentioned that John Launchbury, a director at DARPA, describes three waves of artificial intelligence:

“1. Handcrafted knowledge, or expert systems like IBM’s Deep Blue or Watson [before the addition of Deep Learning].

2. Statistical learning, which includes machine learning and deep learning

3. Contextual adaption, which involves constructing reliable, explanatory models for real-world phenomena using sparse data, like humans do”

Deep learning, which is part of the second AI wave, works well because of the ‘manifold hypothesis’, which refers to how different kinds of high-dimensional natural data tend to clump and be shaped differently when visualized in lower dimensions. Deep learning neural networks are like “spreadsheets on steroids” with regard to their ability to mathematically manipulate and separate data clumps or manifolds for classification and prediction purposes. The core limitations of deep learning include weak abstraction and reasoning, the need for large amounts of data, dependence on the quality of and biases in the data, vulnerability to being deliberately tricked with adversarial examples, slow training, an inability to do planning, and a predominant focus on pattern recognition use cases.
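The idea of separating data "clumps" can be sketched with a toy example. Two classes are placed on concentric circles (the radii are illustrative). No straight line in the raw coordinates separates them, but a single non-linear feature, the squared radius, makes a simple threshold sufficient. A deep network learns transformations of this kind from data; here the transformation is written by hand, and all names are hypothetical.

```python
import math

def ring(radius, n=12):
    """n evenly spaced points on a circle: a toy one-dimensional manifold."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]

# Class 0 lives on the inner circle, class 1 on the outer circle.
points = [(p, 0) for p in ring(1.0)] + [(p, 1) for p in ring(3.0)]

def accuracy(predict):
    """Fraction of points whose predicted class matches the label."""
    return sum(predict(p) == label for p, label in points) / len(points)

def best_linear_accuracy():
    """Brute-force search over straight-line decision rules in raw 2-D space."""
    best = 0.0
    for deg in range(0, 360, 5):
        wx, wy = math.cos(math.radians(deg)), math.sin(math.radians(deg))
        for step in range(-40, 41):
            b = step / 10.0
            best = max(best, accuracy(lambda p: int(wx * p[0] + wy * p[1] + b > 0)))
    return best

def radial_rule(p, threshold=4.0):
    """Non-linear feature (squared radius) followed by a simple threshold."""
    return int(p[0] ** 2 + p[1] ** 2 > threshold)

if __name__ == "__main__":
    print("best straight-line accuracy:", best_linear_accuracy())
    print("accuracy after non-linear feature:", accuracy(radial_rule))
```

The straight-line search cannot reach perfect accuracy because the inner circle sits inside the outer one, while the radial rule classifies every point correctly.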

The article “The Next AI Milestone: Bridging the Semantic Gap” also references DARPA’s perspective on AI and discusses the problem of solving “contextual adaptation” and how the evolution of deep learning can help to find solutions that meld symbolic and connectionist systems. DARPA’s third wave model (see below) takes a lot of inspiration from some of their previously announced research initiatives such as Explanatory interfaces and Meta-Learning. Carlos Perez at Intuition Machine argues that the third AI wave is likely to be an evolution of how we do Deep Learning (see also “The Only Way to Make Deep Learning Interpretable is to have it Explain Itself”, “The Meta Model and Meta Meta Model of Deep Learning”, and “Biologically Inspired Software Architecture for Deep Learning”).

Apart from developing more explainable models, Francois Chollet at Google (an AI researcher and developer of the Deep Learning library Keras) mentioned the use of deep learning to augment explicit search and formal systems, starting with the field of mathematical proofs, to simulate a mathematician’s intuitions about relevant intermediate theorems in a proof (the DeepMath project). Yann LeCun, inventor of convolutional neural networks and director of AI research at Facebook, proposes “energy-based models” as a method of overcoming limits in deep learning. Ilya Sutskever, research director at OpenAI, has also recently described how they have used “evolution strategies” to enable machines to figure out for themselves how to solve a complex task. This approach to machine learning has demonstrated better and more scalable results than state-of-the-art Reinforcement Learning. Geoffrey Hinton (widely called the “father of deep learning”) wants to replace neurons in neural networks with “capsules” that he believes more accurately reflect the cortical structure in the human mind. See also an example where Stanford researchers created a deep learning algorithm that could boost drug development by using a type of one-shot learning that works off small amounts of data for drug discovery and chemistry research.

In “The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World”, Pedro Domingos discusses the five tribes of machine learning (Symbolists, Connectionists, Evolutionaries, Bayesians and Analogizers), each with its own master algorithm, and the opportunities to create a single algorithm that combines key features of all of them by focusing on the overarching patterns of learning phenomena.

Some focus areas in advancing the state of the art in Machine Intelligence are also highlighted in “Solving Intelligence, Solving Real-world Problems”. This post also references the “Five capability levels of Deep Learning Intelligence”: 1. Classification only; 2. Classification with memory; 3. Classification with knowledge; 4. Classification with imperfect knowledge; and 5. Collaborative classification with imperfect knowledge. As mentioned, “even for level 2 ‘classification with memory’ systems which effectively corresponds to recurrent neural networks, there is a clear need for improved supervised and unsupervised training algorithms. Unsupervised learning should for example build a causal understanding of the sensory space with temporal correlations of concurrent and sequential sensory signals. Much research still needs to be done with respect to knowledge representations and integrating this with deep learning recurrent neural network systems all the way from level 3 to level 5 systems (classification with knowledge, imperfect knowledge and collaborative systems with imperfect knowledge).”

In the Failures of Deep Learning paper, the authors describe and illustrate four families of problems for which some of the commonly used existing algorithms fail or suffer significant difficulty, as well as provide theoretical insights explaining their source, and how they might be remedied. The families of problems include non-informative gradients, decomposition versus end-to-end training, architecture and conditioning, and flat activations.

At Cornell University’s CSV17 Keynote Conversation on “Reverse-engineering the brain for intelligent machines”, Jeff Hawkins of Numenta also mentioned the three waves of AI, with Deep Learning being regarded as part of the second wave. Numenta is focused on reverse engineering the neocortex and believes that its Hierarchical Temporal Memory (HTM) technology provides a theoretical framework for both biological and machine intelligence (see also the neuroscience behind the HTM Sensorimotor inference). They believe that HTM is part of the third wave, that understanding how the neocortex works is the fastest path to machine intelligence (studying how the brain works helps us understand the principles of intelligence and build machines that work on the same principles), and that creating intelligent machines is important for the continued success of humankind.

It is clear from many recent scholarly neuroscience articles, and from others such as “Is the Brain More Powerful Than We Thought? Here Comes the Science”, that much inspiration and many ideas still await us to help improve the state of the art in machine intelligence. As mentioned there, a team from UCLA recently discovered a hidden layer of neural communication buried within the dendrites: rather than acting as passive conductors of neuronal signals, as previously thought, dendrites actively generate their own spikes, five times larger and more frequent than the classic spikes stemming from neuronal bodies (soma). This suggests that learning may be happening at the level of dendrites rather than neurons, using fundamentally different rules than previously thought. This hybrid digital-analog, dendrite-soma, duo-processor parallel computing is highly intriguing and can lead to a whole new field of cognitive computing. These findings could galvanize AI as well as the engineering of new kinds of neuron-like computer chips. In parallel to this, see also the “We Just Created an Artificial Synapse That Can Learn Autonomously” article, which mentions a team of researchers from the National Center for Scientific Research (CNRS) that has developed an artificial synapse called a memristor directly on a chip. It is capable of learning autonomously and can improve how fast artificial neural networks learn.

Quantum Computing and Deep Learning

For an introduction to Quantum computing and Deep Learning, see “A Quantum Boost for Machine Learning”, where Maria Schuld describes how researchers are enhancing machine learning by combining it with quantum computation. In “Commercialize quantum technologies in five years” it is reported that Google’s Quantum AI Laboratory has set out investment opportunities on the road to the ultimate quantum machines. In the article, Google highlights three commercially viable uses for early quantum-computing devices: quantum simulation (e.g., modelling chemical reactions and materials), quantum-assisted optimization (e.g., online recommendations and bidding strategies for advertisements use optimization algorithms to respond in the most effective way to consumers’ needs and changing markets; logistics companies need to optimize their scheduling, planning and product distribution daily; improve patient diagnostics for health care), and quantum sampling (e.g., sampling from probability distributions is widely used in statistics and machine learning). They reckon that faster computing speeds in these areas would be commercially advantageous in sectors from artificial intelligence to finance and health care. IBM has also recently announced its move into building commercially available quantum computing systems, which it dubs “IBM Q” and plans to make available on the IBM Cloud platform. IBM has highlighted AI, cloud security, supply chain logistics (e.g., calculating a massive volume of possibilities to help optimize fleet operations, particularly during risky times, such as during the holiday season), and financial services as high-value targets.

Machine Intelligence Institute of Africa (MIIA)

Herewith some announcements about the upcoming MIIA Meetups, where the main themes will be Deep Learning and its applications as well as platforms such as Google’s TensorFlow and IBM Watson. See also the link below about the use of quantum computation to enhance machine learning.

MIIA Meetup in Cape Town on Wednesday 3 May 2017 at 6pm

Agenda

1. Deep Learning and its applications – Dr Jacques Ludik – MIIA, Cortex Logic, Bennit.AI

2. Demonstration of Deep Learning examples using Google’s TensorFlow – Dr Jacques Ludik – MIIA, Cortex Logic, Bennit.AI

- Google TensorFlow

- Getting started with Tensorflow

- Learn TensorFlow and deep learning, without a Ph.D.

- TensorFlow examples

- Introduction to Deep Neural Networks with Keras and Tensorflow

- Keras examples

- Tensorboard

- https://github.com/llSourcell/how_to_use_tensorboard_live

- tensorboard --logdir='/tmp/mnist_tutorial/'

- http://10.0.0.15:6006

- Tensorflow Playground

- Convolution Neural Networks

- Deep Reinforcement Learning

- Flappy Bird – Deep Reinforcement Learning (Deep Q-Network)

3. IBM Watson

– Insights from IBM’s InterConnect Conference in Las Vegas – Rikus Combrinck, Senior Data Scientist at OLSPS Analytics (M.Eng Computer Engineering)

- https://hackmd.io/s/rJhIJBXk- (document format)

- https://hackmd.io/p/rJhIJBXk- (slide format)

– IBM Watson discussion (including sharing experience with IBM Watson for developing Intelligent Virtual Assistants)

4. Demonstration of a state-of-the-art AI conversational system – Rikus Combrinck, Senior Data Scientist at OLSPS Analytics (M.Eng Computer Engineering)

- https://hackmd.io/s/r1XJkBmy- (document format)

- https://hackmd.io/p/r1XJkBmy- (slide format)

Registration via the MIIA Meetup – Cape Town:

Venue: CiTi, Bandwidth Barn, Block B, 3rd Floor, Woodstock Exchange, 66-68 Albert Road, Woodstock, Cape Town

Sponsors: CiTi, Cortex Logic

MIIA Meetup in Sandton on Thursday 11 May 2017 at 6:30pm

Agenda

1. Deep Learning and its applications – Dr Jacques Ludik – MIIA, Cortex Logic, Bennit.AI

2. Demonstration of Deep Learning examples using Google’s TensorFlow – Dr Jacques Ludik – MIIA, Cortex Logic, Bennit.AI

3. IBM Watson

– Insights from IBM’s InterConnect Conference in Las Vegas – Rikus Combrinck, Senior Data Scientist at OLSPS Analytics (M.Eng Computer Engineering)

- https://hackmd.io/s/SJr4CR-e- (document format)

- https://hackmd.io/p/SJr4CR-e- (slide format)

– IBM Watson discussion (including sharing experience with IBM Watson for developing Intelligent Virtual Assistants)

4. Demonstration of a state-of-the-art AI conversational system – Rikus Combrinck, Senior Data Scientist at OLSPS Analytics (M.Eng Computer Engineering)

- https://hackmd.io/s/r1XJkBmy- (document format)

- https://hackmd.io/p/r1XJkBmy- (slide format)

You can also register via the MIIA Meetup – Jhb/Pta:

Venue: Equinox, 15 Alice Lane, Absa Capital, North Building, Sandton, Gauteng, South Africa

Sponsors: Barclays Equinox Events, Cortex Logic

Photos of both MIIA events: https://www.facebook.com/media/set/?set=oa.1185064868289550&type=3

A Quantum Boost for Machine Learning

We’ve already shared on our MIIA Slack channel the article “A Quantum Boost for Machine Learning” by Maria Schuld (a PhD student researching machine learning and quantum information processing at the University of KwaZulu-Natal). In the article she describes how researchers are enhancing machine learning by combining it with quantum computation. We also aim to share some of this work via an upcoming MIIA event.

“How can AI disrupt industries?” Panel Discussion – 25 May at 6pm in Cape Town (Far-ventures)

MIIA will also be represented at this panel discussion, hosted by Far Ventures, on how Artificial Intelligence can disrupt industries. More about this event in follow-up communication.

For more details see:

- https://www.facebook.com/events/2282787705278703/

- https://www.quicket.co.za/events/28216-far-ventures-talks-1-how-can-artificial-intelligence-disrupt-industries/#/

The event will be a panel discussion with the following speakers:

- Jacques Ludik, Founder & President of the Machine Intelligence Institute of Africa (MIIA) / Founder & CEO of @CortexLogic / CTO Bennit.ai

- Antoine Paillusseau, Co-Founder @FinChatBot

- Alex Conway, CTO @NumberBoost

- Ryan Falkenberg, Co-CEO Clevva

- Daniel Schwartzkopff, Co-Founder DataProphet

MIIA AI / Data Science Hackathon – May/June 2017

MIIA also plans to host an AI / Data Science Hackathon during the May/June 2017 time frame. Anyone interested in participating is welcome to email us at info@machineintelligenceafrica.org. More about this event in follow-up communication.

Deep Learning Indaba in Johannesburg – 11-15 September 2017

http://www.deeplearningindaba.com/

http://www.deeplearningindaba.com/apply.html

Key Dates:

- Applications: 17 April – 31 May 2017

- Acceptance notification: 15 June 2017

- Registration opens: 15 June 2017

ABOUT MACHINE INTELLIGENCE INSTITUTE OF AFRICA

Machine Intelligence Institute of Africa (MIIA):

- http://machineintelligenceafrica.org

- Community: http://machineintelligenceafrica.org/members/

- Events: http://machineintelligenceafrica.org/activities/events/

- Projects: http://machineintelligenceafrica.org/activities/projects/

- Partners: http://machineintelligenceafrica.org/partners/

- Blog: http://machineintelligenceafrica.org/blog/

- MIIA YouTube Channel (Playlist): http://www.youtube.com/playlist?list=PLOqigXLyHjNYf8R4WIM7HMVbQpQ03QNu-

Some Recent MIIA posts

- AI in Manufacturing and Data Science/IoT hackathon on Mining data

- Data Science and Machine Intelligence use cases in Africa 2017

- Machine Intelligence Institute of Africa collaboration with Data Science Nigeria

- Solving Intelligence, Solving Real-world Problems

- Artificial Intelligence and Data Science use cases in Africa

- Machine Intelligence Institute of Africa

- Artificial Intelligence in Finance, Education, Healthcare, Manufacturing, Agriculture, and Government

- Artificial Intelligence at the centre of MIIA partner activities with Silicon Cape, Rise Africa, and Insights2Impact

Joining Machine Intelligence Institute of Africa (MIIA)

- Anyone interested in joining MIIA and/or participating in using smart technologies to help address African problems such as those in education, finance, healthcare, energy, agriculture and unemployment is welcome to do this here. See How to participate for more details on various ways to help MIIA execute its mission.

- View the current MIIA Community on the MIIA website as well as MIIA communications on Slack, the LinkedIn group, Meetup, Google+, Facebook, and Twitter.

- MIIA Meetup in Cape Town: https://www.meetup.com/Machine-Intelligence-Institute-of-Africa/

- MIIA Meetup in Johannesburg/Pretoria: https://www.meetup.com/Machine-Intelligence-Institute-of-Africa-Jhb-Pta/

Bennit.AI

- http://bennit.ai/

- Bennit.AI is a self-learning, highly personalized virtual production assistant specifically designed for industry, making the time and efficiency of industrial users its most important mission, every minute of every day. Bennit.AI layers across existing technologies to drastically simplify and improve access to information, helping industrial users make better decisions and improve business outcomes.

Cortex Logic

- http://cortexlogic.com/

- Cortex Logic is a machine intelligence software & solutions company that solves real-world problems through delivering state-of-the-art Machine Intelligence based solutions such as intelligent virtual assistants and advisors, fraud detection, churn prediction, smart risk scoring, smart trading, real-time customer insights, smart recommendations and purchase prediction, and smart payment for finance, healthcare, education, retail, telecoms, industrial and public sector as well as other industries where the automation of tasks can lead to economic benefit, scalability and productivity. Cortex Logic helps businesses and organizations not only survive, but thrive in the Smart Technology Era. It also aims to contribute towards solving intelligence through advancing the state-of-the-art in machine intelligence and building cognitive systems that are contextually aware, learn at scale, support unsupervised learning where possible, reason with purpose and interact with humans naturally.